I am interested in natural language processing and artificial intelligence, especially in the following directions:

(1) To make models (agents) capable of continually learning multiple tasks and transferring knowledge.

(2) To make models (agents) more robust, interpretable, efficient, and safe.

(3) To enable models (agents) to benefit from, and be beneficial to, other models (agents), modalities, and humans.

I have also contributed to agent projects such as SWE-Smith and Computer Agent Arena.

Attacking Vision-Language Computer Agents via Pop-ups

Yanzhe Zhang,

Tao Yu,

Diyi Yang

ACL, 2025

code / bibtex

Distilling an End-to-End Voice Assistant from Speech Recognition Data

Will Held,

Ella Li,

Michael Ryan,

Weiyan Shi,

Yanzhe Zhang,

Diyi Yang

ACL, 2025

website / training code / eval code / bibtex

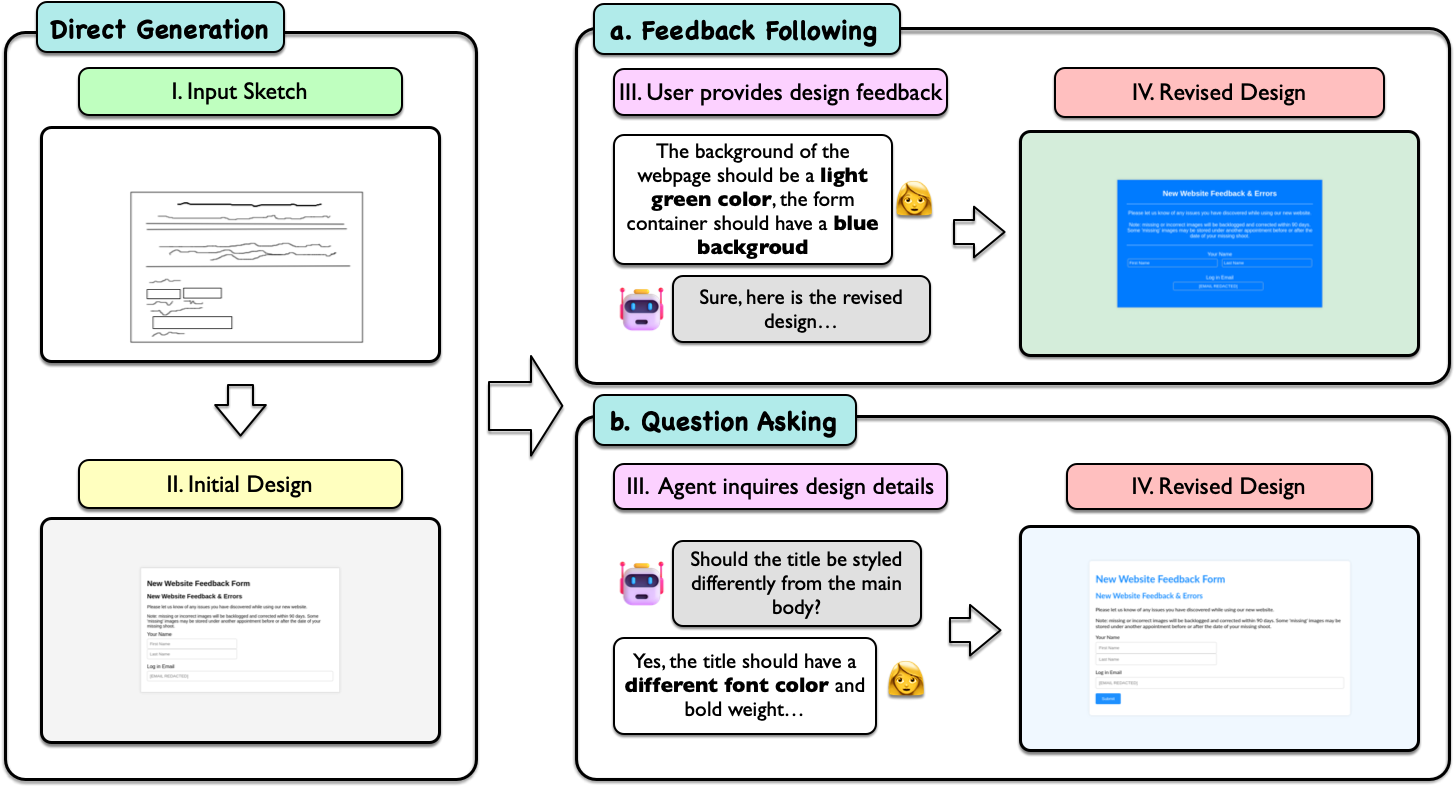

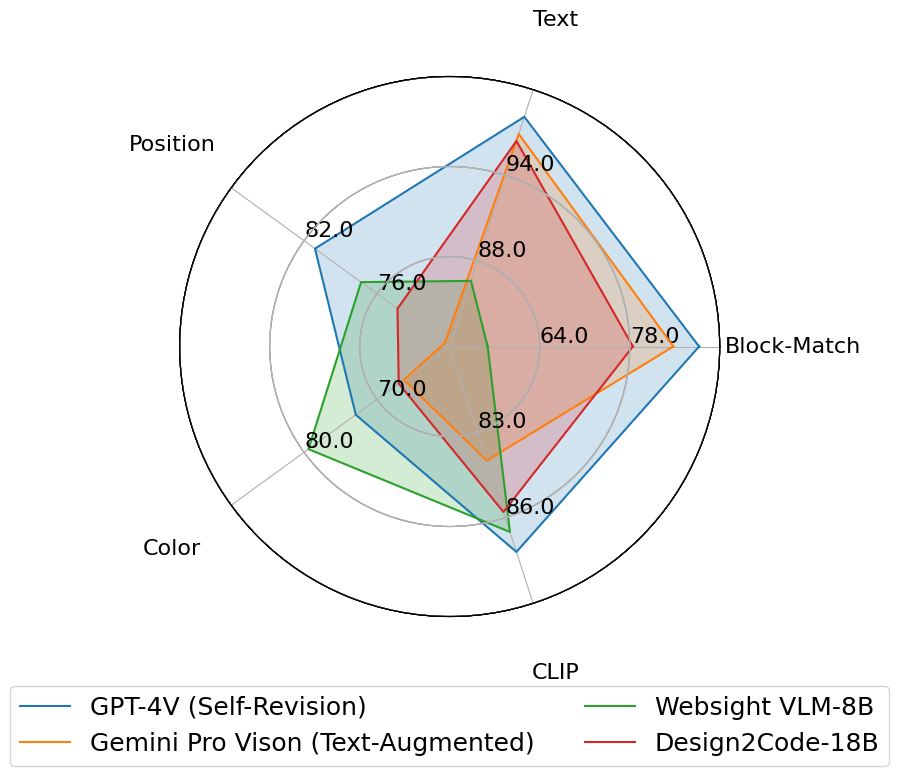

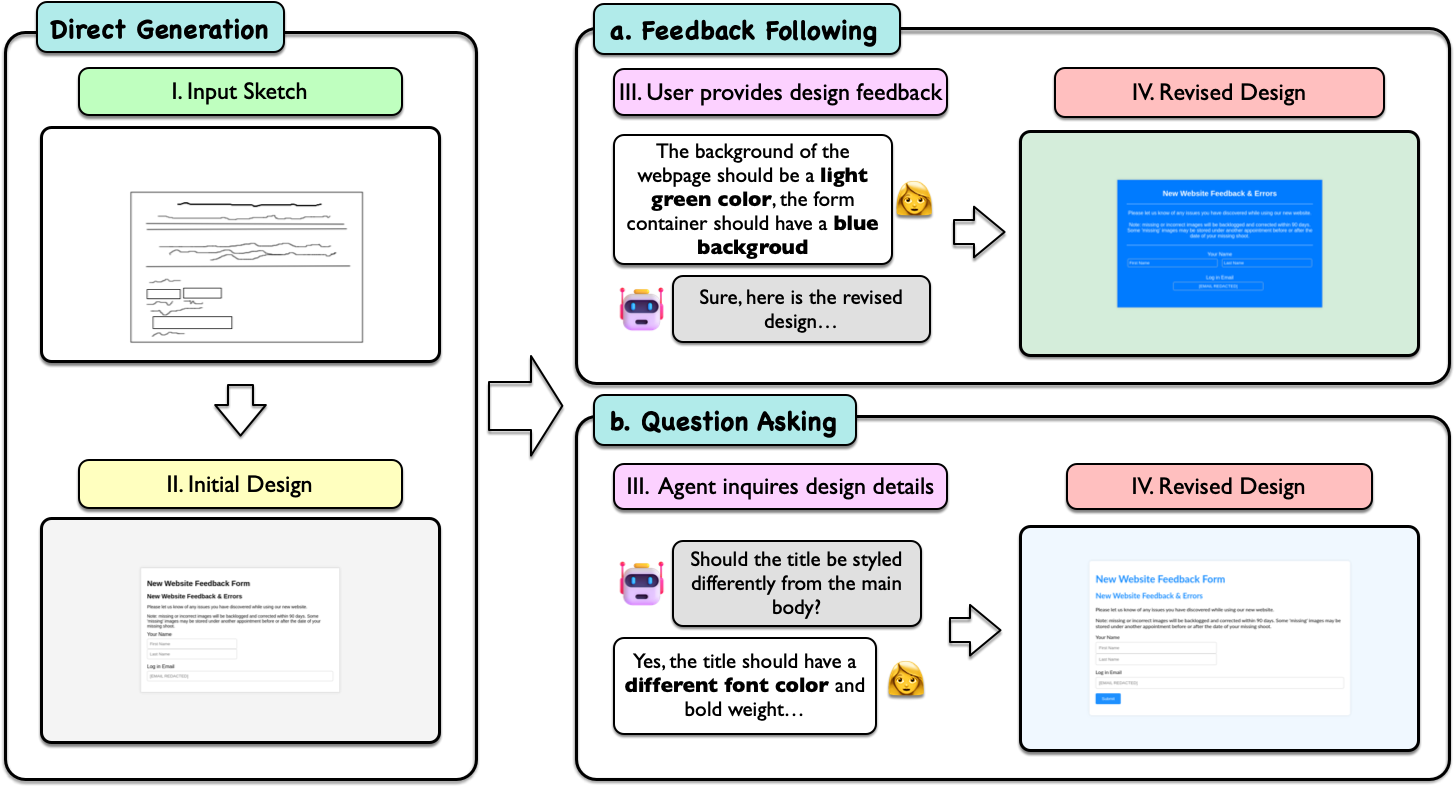

Sketch2Code: Evaluating Vision-Language Models for Interactive Web Design Prototyping

Ryan Li,

Yanzhe Zhang,

Diyi Yang

NAACL, 2025

website / code / bibtex

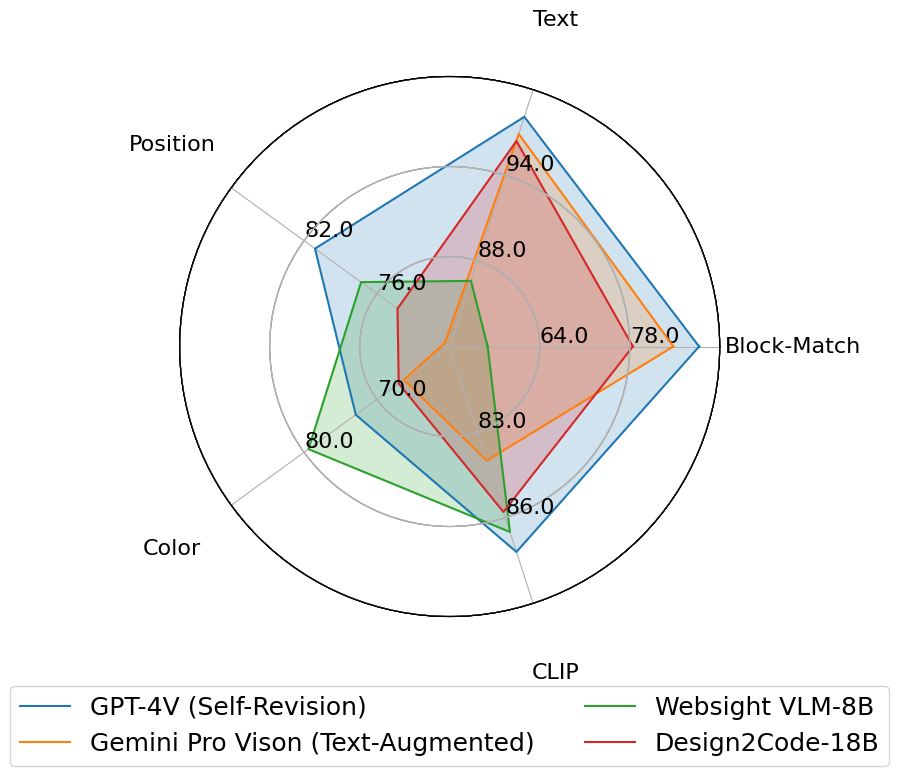

Design2Code: How Far Are We From Automating Front-End Engineering?

Chenglei Si*,

Yanzhe Zhang*,

Ryan Li,

Zhengyuan Yang,

Ruibo Liu,

Diyi Yang

NAACL, 2025

website / code / data / bibtex

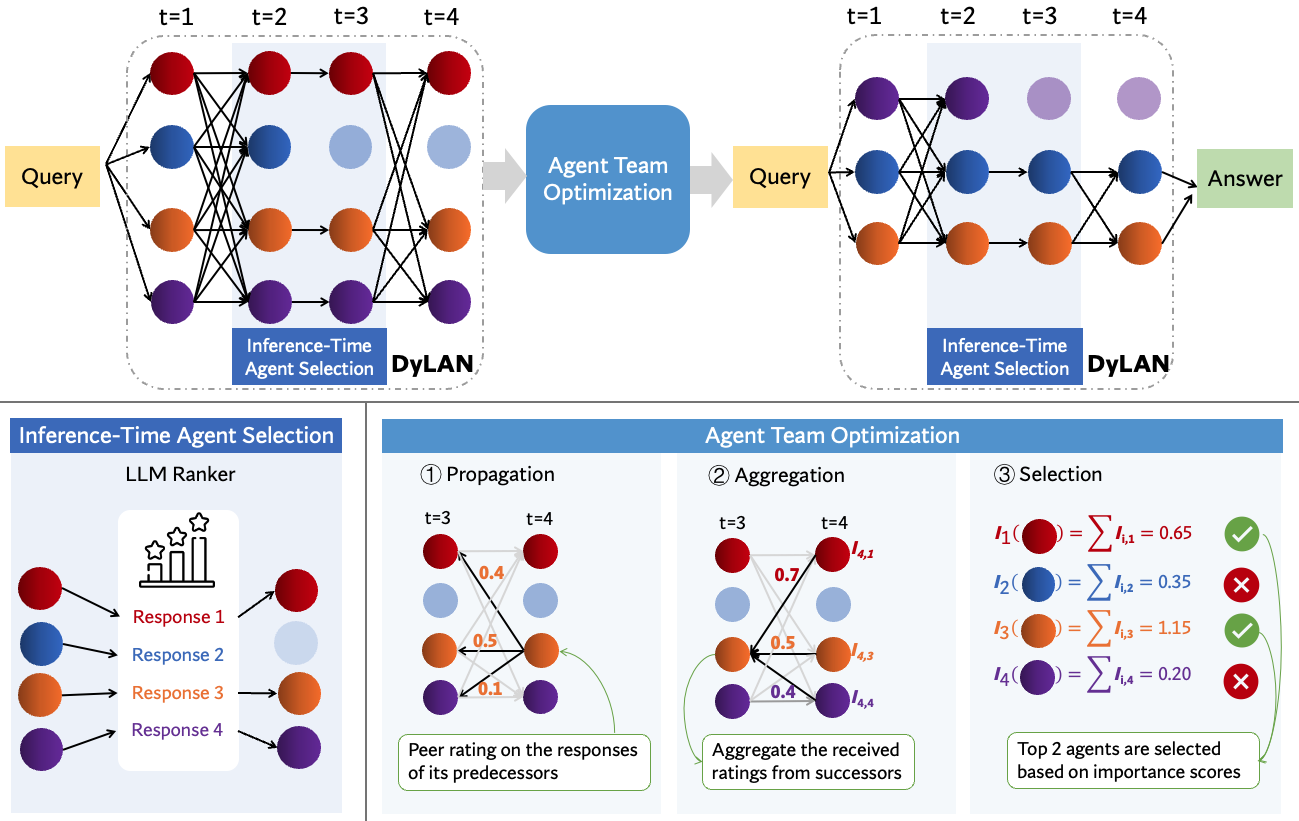

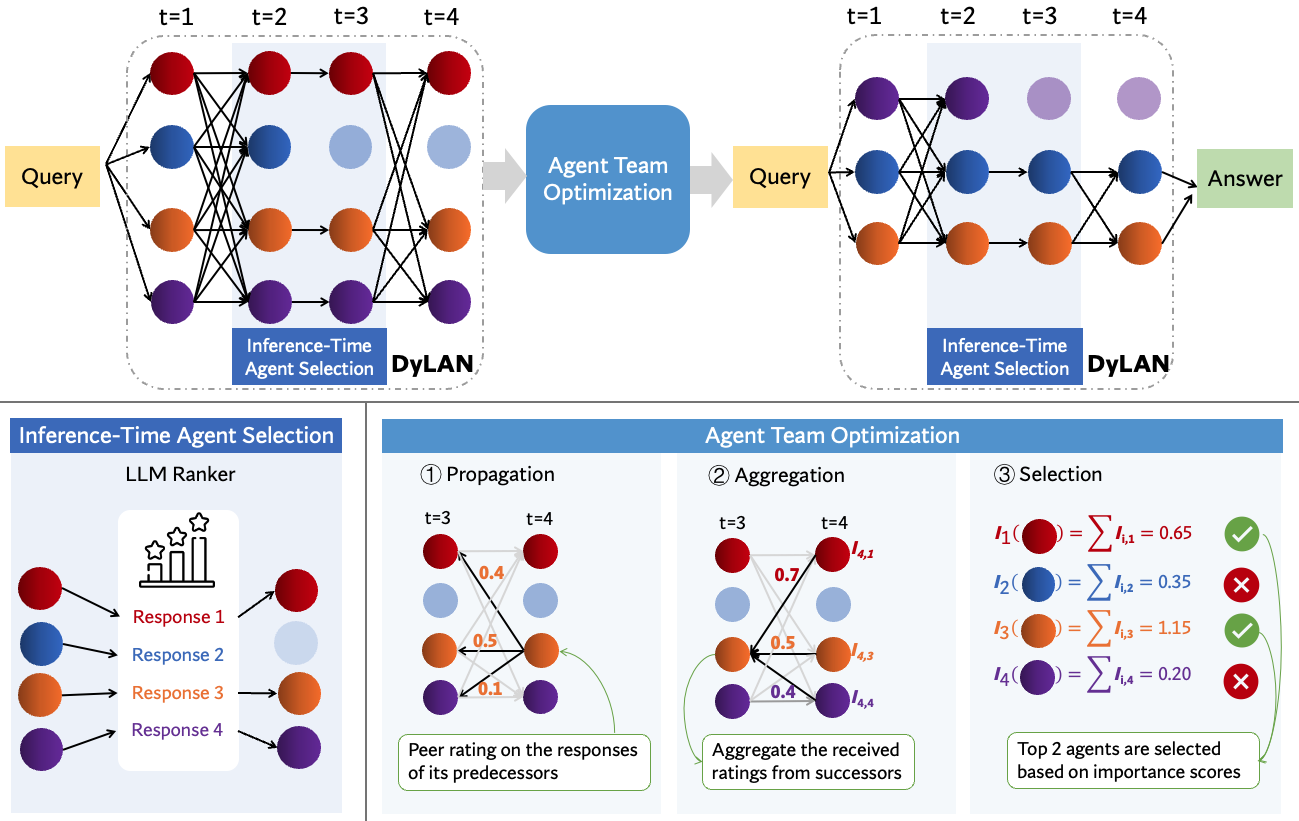

Dynamic LLM-Agent Network: An LLM-agent Collaboration Framework with Agent Evaluation

Zijun Liu,

Yanzhe Zhang,

Peng Li,

Yang Liu,

Diyi Yang

COLM, 2024

code / bibtex

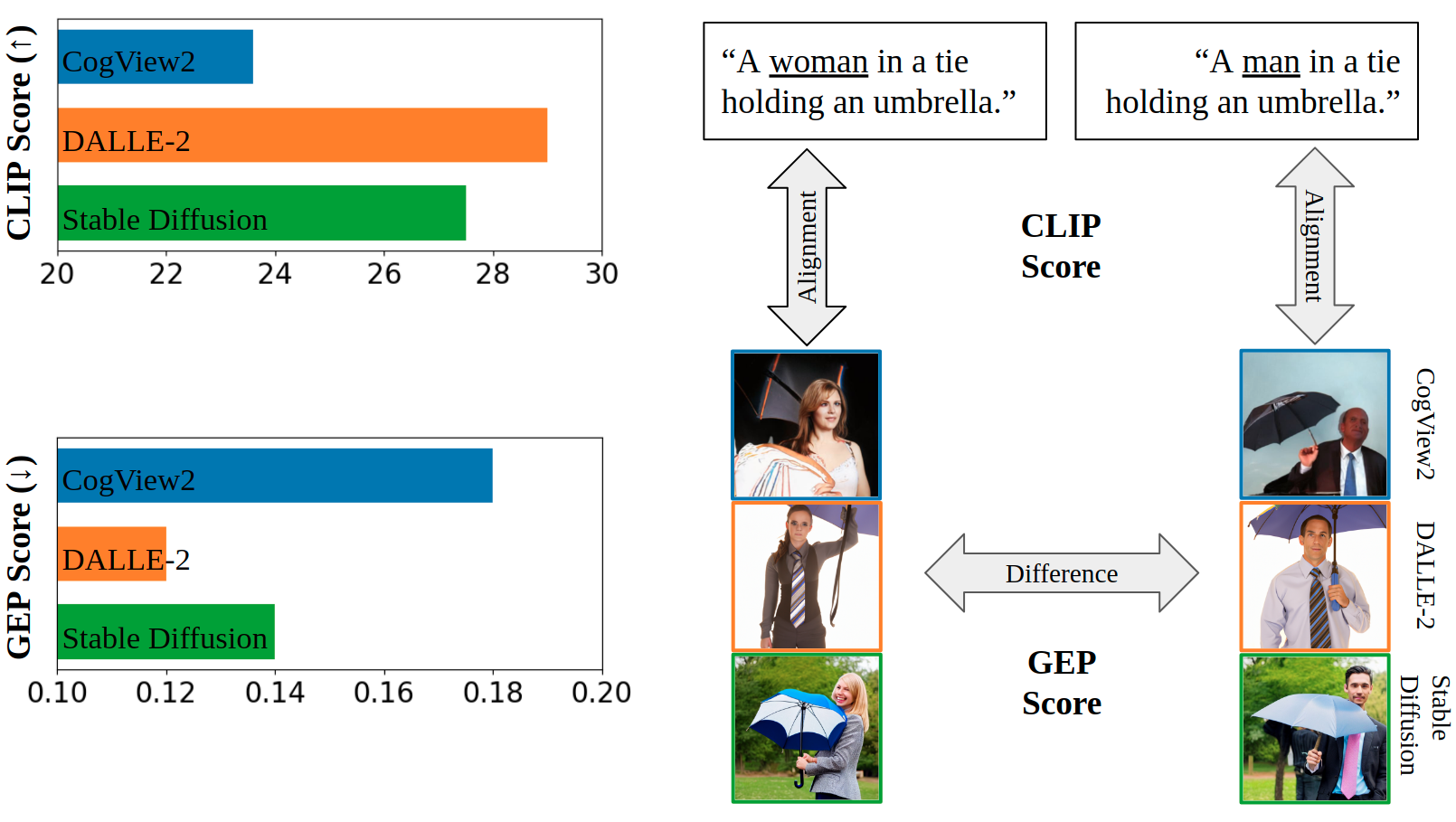

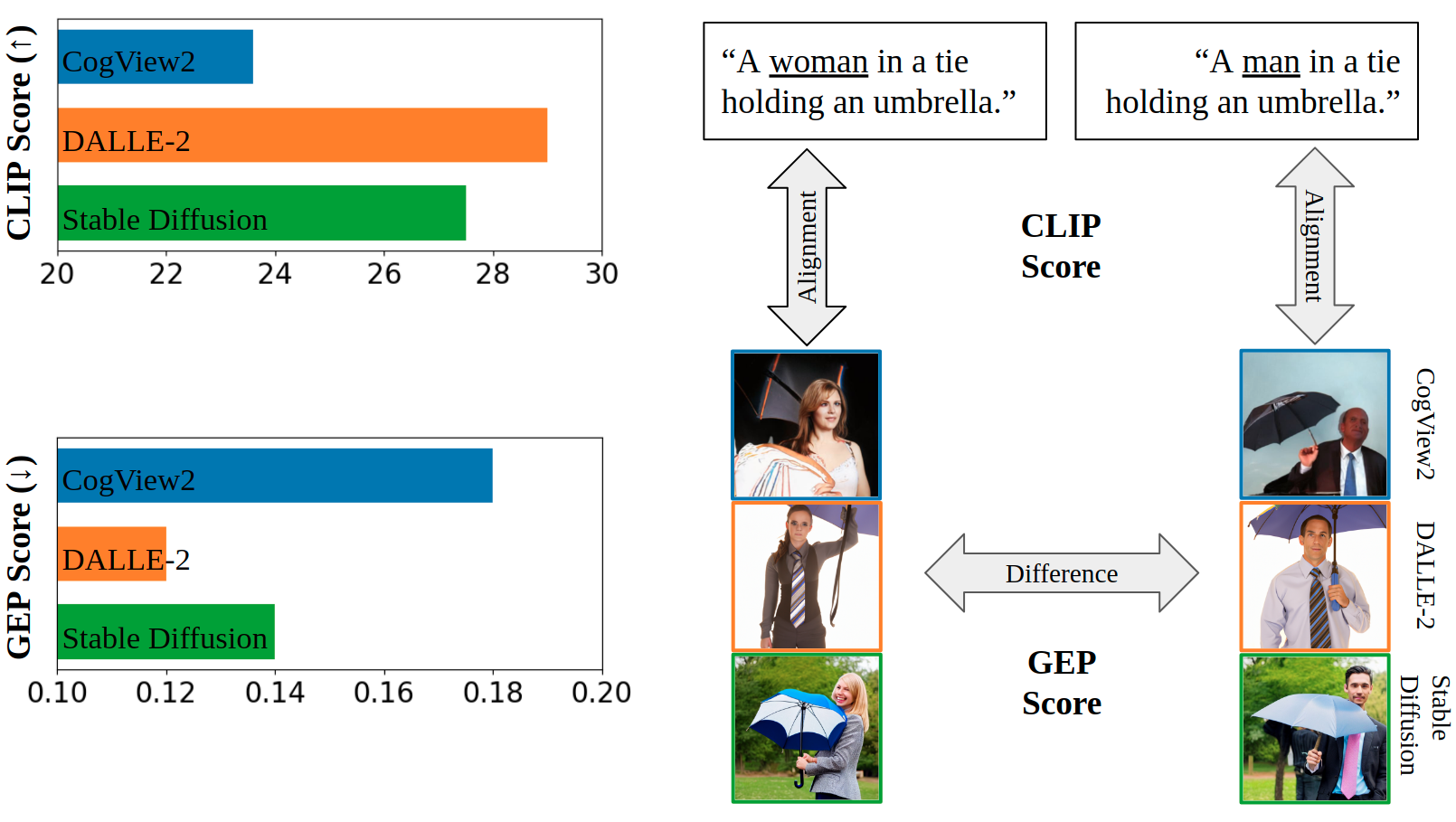

Auditing Gender Presentation Differences in Text-to-Image Models

Yanzhe Zhang,

Lu Jiang,

Greg Turk,

Diyi Yang

EAAMO, 2024

website / code / data / bibtex

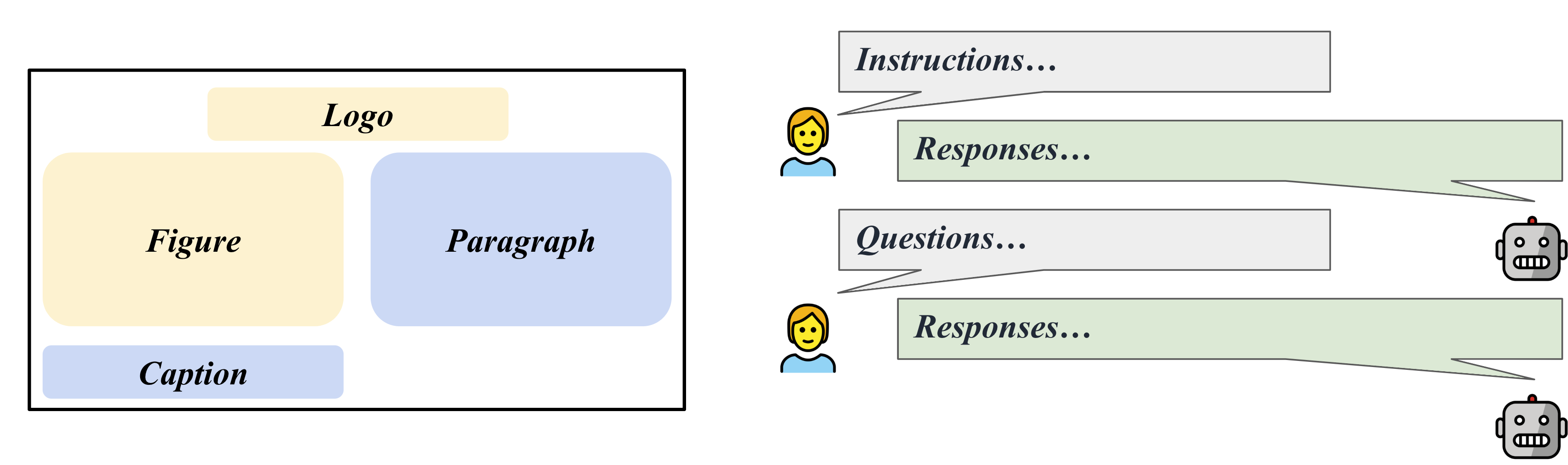

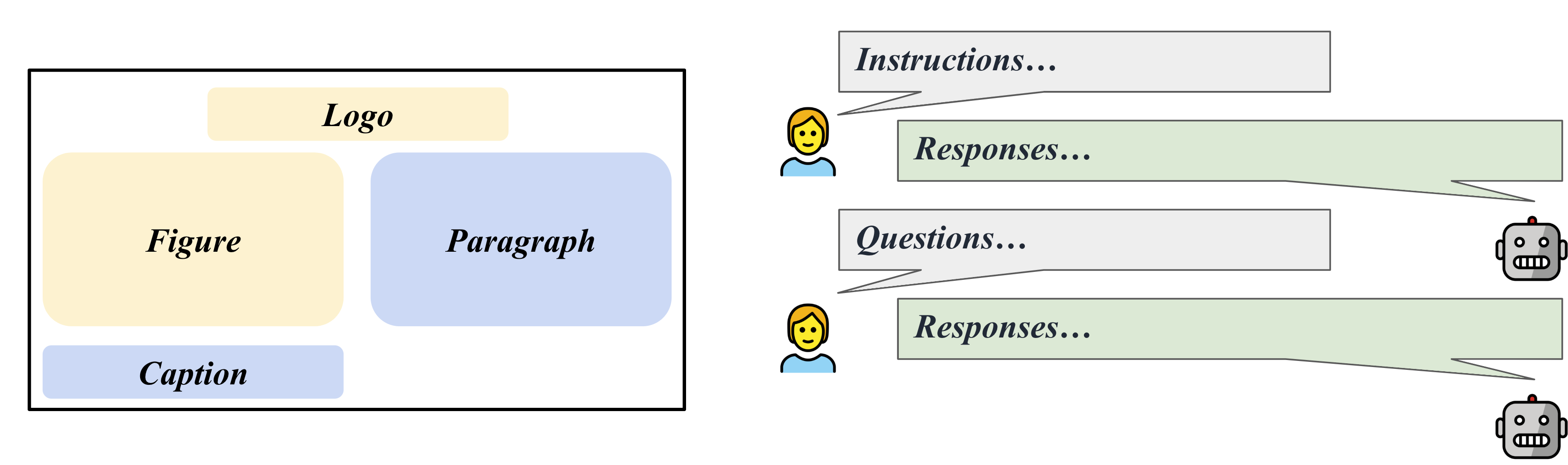

Enhanced Visual Instruction Tuning for Text-rich Image Understanding

Yanzhe Zhang,

Ruiyi Zhang,

Jiuxiang Gu,

Yufan Zhou,

Nedim Lipka,

Diyi Yang,

Tong Sun

NeurIPS Workshop on Instruction Tuning and Instruction Following, 2023

website / code / data / bibtex / improved version: TRINS, CVPR 2024

Robustness of Demonstration-based Learning Under Limited Data Scenario

Hongxin Zhang,

Yanzhe Zhang,

Ruiyi Zhang,

Diyi Yang

EMNLP, 2022

code / bibtex

Continual Sequence Generation with Adaptive Compositional Modules

Yanzhe Zhang,

Xuezhi Wang,

Diyi Yang

ACL, 2022

code / bibtex

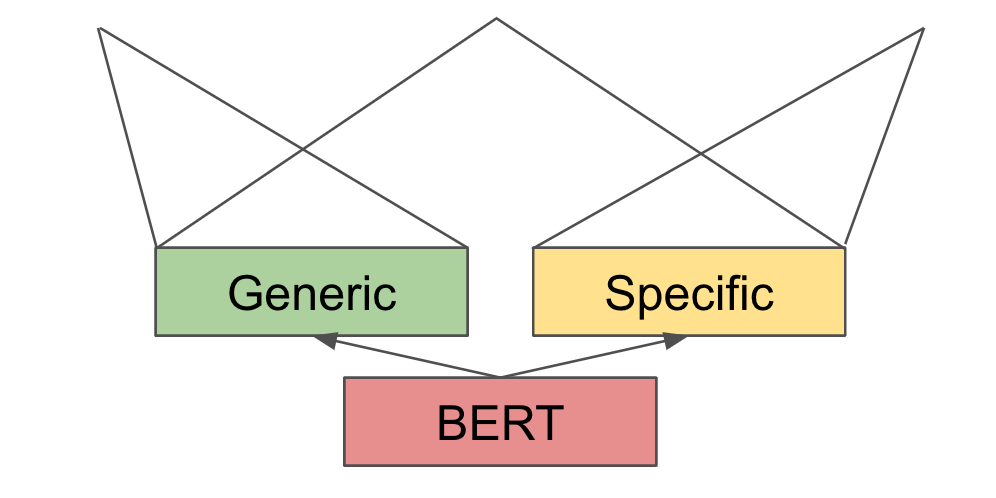

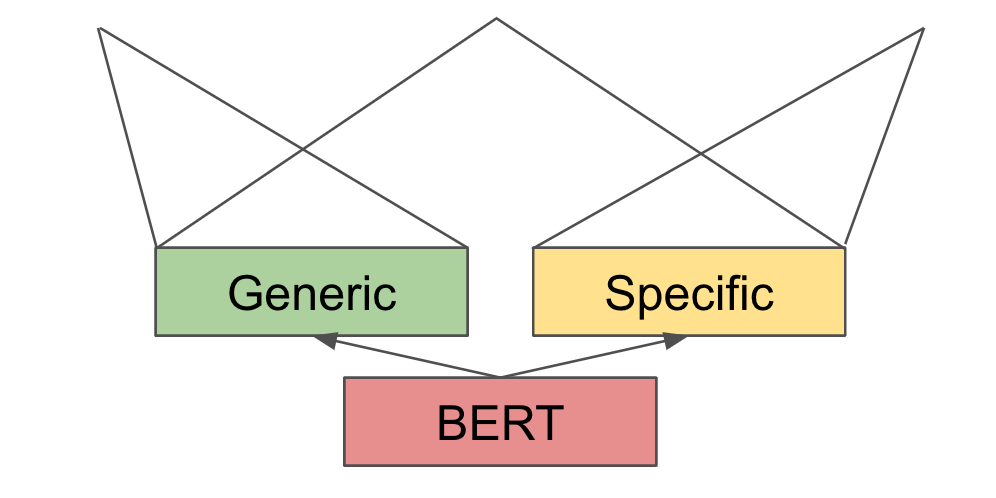

Continual Learning for Text Classification with Information Disentanglement Based Regularization

Yufan Huang*,

Yanzhe Zhang*,

Jiaao Chen,

Xuezhi Wang,

Diyi Yang

NAACL, 2021

code / bibtex

Service

Volunteer: NAACL 2021.

Reviewer: EMNLP 2022, ICLR 2023, EACL 2023, ACL 2023, EMNLP 2023, CoLLAs 2024, ARR (Oct 2023, Dec 2023, Feb 2024, Apr 2024, Jun 2024, Oct 2024).
